MLOps (Machine Learning Operations)

In this article, we will look at what MLOps is and at the tools that support data science and ML projects built with the MLOps approach. Data scientists, data engineers, and machine learning engineers need specific tools to build, deploy, and monitor these projects end to end.

We'll go through several of these tools in detail, along with their key components and features. Let's start!

MLOps

In my own understanding, MLOps is an architecture for the model development lifecycle (MDLC). It lets data scientists, data engineers, and machine learning engineers collaborate to speed up and streamline model development, with continuous integration and continuous deployment (CI/CD) of the model and proper tracking and monitoring of its behavior and performance.

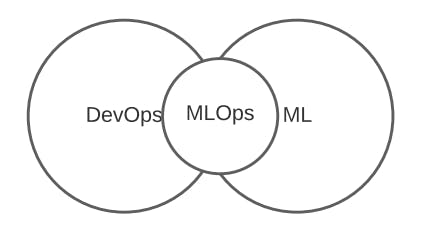

It is the combination of DevOps and machine learning:

Machine Learning: in the sense of using machine learning algorithms to train and develop ML models.

DevOps: in the sense that the developed ML model is continuously integrated and deployed, and monitored while it runs.

ML Tools

Several tools support this model development lifecycle under the MLOps architecture:

Kubeflow: a platform for building pipelines that orchestrate machine learning workflows and then running those pipelines on Kubernetes.

By pipeline I mean everything from data cleaning to model training and prediction, i.e. processing the data, using a machine learning algorithm to train a model, and deploying that model to a model serving system such as TensorFlow Serving.
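To make the idea of a pipeline concrete, here is a toy, in-process sketch in plain Python. It is not Kubeflow's API: Kubeflow would run each step as a separate container on Kubernetes, whereas here each step is just a function whose output feeds the next. The step names (`clean`, `train`, `predict`), the tiny dataset, and the one-variable least-squares "model" are all hypothetical.

```python
# Toy stand-in for a Kubeflow-style pipeline (hypothetical steps; Kubeflow
# would run each step as its own container on Kubernetes).

def clean(raw_rows):
    """Data cleaning: drop rows with missing values."""
    return [(x, y) for x, y in raw_rows if x is not None and y is not None]

def train(rows):
    """Training: fit y = a*x + b with ordinary least squares."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    var = sum((x - mean_x) ** 2 for x, _ in rows)
    a = cov / var
    b = mean_y - a * mean_x
    return {"a": a, "b": b}

def predict(model, x):
    """Serving: apply the trained model to a new input."""
    return model["a"] * x + model["b"]

def run_pipeline(data, steps):
    """Run the steps in order, passing each step's output to the next."""
    artifact = data
    for step in steps:
        artifact = step(artifact)
    return artifact

raw = [(1, 3), (2, 5), (None, 7), (3, 7), (4, None)]
model = run_pipeline(raw, [clean, train])
print(predict(model, 10))  # → 21.0
```

Keeping each step a separate unit with a single input and output is what lets an orchestrator like Kubeflow run, retry, and cache steps independently.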

Kubernetes: a platform for orchestrating containerized applications. A containerized application is one packaged together with all the code, dependencies, and runtime it needs to run.
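Kubernetes is usually told what to run through a manifest. The sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The application name, image URL, replica count, and port are hypothetical placeholders.

```python
import json

def deployment_manifest(name, image, replicas=2, port=8080):
    """Build a minimal Kubernetes Deployment manifest as a dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # how many copies of the pod to keep running
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            # The container image bundles code, dependencies, and runtime.
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }

# Hypothetical model-serving image.
manifest = deployment_manifest("model-server", "example.com/model-server:1.0")
print(json.dumps(manifest, indent=2))
```

Saved as `deployment.json`, the output could be applied with `kubectl apply -f deployment.json`; Kubernetes would then keep two replicas of the container running.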

MLflow: a platform for managing the complete machine learning lifecycle, from model training to production. It can track your ML models during training and while they run, and it can store models and load them in production code.

These capabilities come from MLflow's core components:

MLflow Tracking: records an experiment's code, data, configuration, and results.

MLflow Projects: packages ML code in a reproducible format that can run on any platform.

MLflow Models: a format for packaging and storing machine learning models.
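To illustrate what experiment tracking records, here is a toy tracker in plain Python that writes each run's code version, parameters (configuration), and metrics (results) to a JSON file and then picks the best run. It is a sketch of the idea, not MLflow's implementation; in MLflow itself you would open a run with `mlflow.start_run()` and record values with `mlflow.log_param()` and `mlflow.log_metric()`. The class name, parameter values, and commit hash are hypothetical.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path

class ToyTracker:
    """Toy experiment tracker (hypothetical): one JSON file per run."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def log_run(self, params, metrics, code_version):
        run = {
            "run_id": uuid.uuid4().hex,
            "start_time": time.time(),
            "code_version": code_version,  # e.g. a git commit hash
            "params": params,              # configuration
            "metrics": metrics,            # results
        }
        (self.root / f"{run['run_id']}.json").write_text(json.dumps(run))
        return run["run_id"]

    def best_run(self, metric, higher_is_better=True):
        """Load every recorded run and return the one with the best metric."""
        runs = [json.loads(p.read_text()) for p in self.root.glob("*.json")]
        pick = max if higher_is_better else min
        return pick(runs, key=lambda r: r["metrics"][metric])

with tempfile.TemporaryDirectory() as d:
    tracker = ToyTracker(d)
    tracker.log_run({"lr": 0.1}, {"accuracy": 0.81}, "abc123")
    tracker.log_run({"lr": 0.01}, {"accuracy": 0.87}, "abc123")
    best = tracker.best_run("accuracy")
    print(best["params"])  # → {'lr': 0.01}
```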

Apache Spark: a data processing engine for large-scale data processing and machine learning (predictive analytics). It achieves this through components such as:

Spark SQL: for working with structured datasets, for example through SQL queries.

Spark MLlib: Spark's machine learning library, which provides tools for predictive analytics.
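Running Spark requires a Spark installation, so the sketch below uses Python's built-in `sqlite3` as a stand-in to show the kind of SQL query Spark SQL runs over a structured dataset, where every row has the same named, typed columns. The table and values are hypothetical. In PySpark, the same query could be run by registering a DataFrame with `df.createOrReplaceTempView("sales")` and calling `spark.sql(query)`, with Spark distributing the work across a cluster.

```python
import sqlite3

# Structured data: each row has the same columns (day, region, amount).
rows = [
    ("2024-01-01", "north", 120.0),
    ("2024-01-01", "south", 80.0),
    ("2024-01-02", "north", 30.0),
    ("2024-01-02", "south", 50.0),
]

# sqlite3 is only a local stand-in for Spark SQL here.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (day TEXT, region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

query = """
    SELECT region, SUM(amount) AS total
    FROM sales
    GROUP BY region
    ORDER BY total DESC
"""
result = conn.execute(query).fetchall()
conn.close()
print(result)  # → [('north', 150.0), ('south', 130.0)]
```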

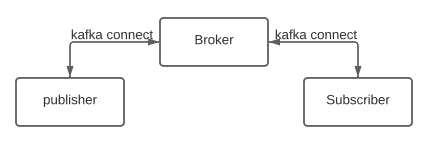

Apache Kafka: a highly scalable, distributed platform for collecting, processing, storing, and distributing messages (events or logs) in real time between external systems (publishers and subscribers).

It does this through the following components:

Kafka Connect: connects Kafka to external systems; it is in charge of moving messages into Kafka from external systems and out of Kafka to them.

Kafka Streams: a Java library that does the heavy lifting of message (stream) processing.

ksqlDB: a streaming database built on Kafka Streams (developed by Confluent rather than as part of Apache Kafka itself) that lets you query messages with SQL for real-time analysis.
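The core idea behind Kafka's publish/subscribe model is an append-only log per topic, with each consumer group keeping its own read offset. The toy, in-memory broker below (plain Python, hypothetical class, group, and topic names) sketches only that idea; it has none of the partitioning, replication, persistence, or networking that real Kafka provides.

```python
from collections import defaultdict

class ToyBroker:
    """Toy in-memory broker: one append-only log per topic.

    Each consumer group tracks its own offset, so several groups can read
    the same topic independently and resume where they left off.
    """

    def __init__(self):
        self.topics = defaultdict(list)  # topic -> list of messages
        self.offsets = defaultdict(int)  # (group, topic) -> next offset to read

    def produce(self, topic, message):
        """Publisher side: append a message and return its offset."""
        self.topics[topic].append(message)
        return len(self.topics[topic]) - 1

    def consume(self, group, topic, max_messages=10):
        """Subscriber side: read from the group's offset, then advance it."""
        start = self.offsets[(group, topic)]
        batch = self.topics[topic][start:start + max_messages]
        self.offsets[(group, topic)] = start + len(batch)  # "commit" the offset
        return batch

broker = ToyBroker()
for event in ["login:alice", "click:alice", "login:bob"]:
    broker.produce("user-events", event)

analytics = broker.consume("analytics", "user-events")            # all three events
alerts = broker.consume("alerts", "user-events", max_messages=1)  # first event only
broker.produce("user-events", "logout:alice")
analytics_next = broker.consume("analytics", "user-events")       # only the new event
```

Because messages stay in the log after being read, a new subscriber can join later and still replay the topic from the beginning.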

Data Lake: a storage system that holds data of any kind (both structured and unstructured) while keeping it accessible for data processing, real-time analytics, and machine learning. Storage commonly used for data lakes includes AWS S3 buckets, Google Cloud Storage, and Azure Blob Storage; Delta Lake is an open-source storage layer that runs on top of such storage and adds ACID transactions.
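A key property of a data lake is that data lands in its raw form and structure is applied only when it is read ("schema-on-read"). The sketch below imitates that with a local temporary directory standing in for object storage such as S3; the folder layout (`raw/<source>/`), file names, and contents are hypothetical.

```python
import csv
import json
import tempfile
from pathlib import Path

def write_raw(lake, source, filename, data: bytes):
    """Land raw bytes as-is under raw/<source>/; no schema is enforced."""
    path = Path(lake) / "raw" / source / filename
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_bytes(data)
    return path

lake = tempfile.mkdtemp()  # stand-in for a bucket; not cleaned up here

# Structured (CSV), semi-structured (JSON lines), and unstructured (binary) data side by side.
write_raw(lake, "sales", "2024-01-01.csv", b"region,amount\nnorth,120\nsouth,80\n")
write_raw(lake, "clicks", "2024-01-01.jsonl",
          b'{"user": "alice", "page": "/home"}\n{"user": "bob", "page": "/cart"}\n')
write_raw(lake, "images", "cat.png", b"\x89PNG\r\n\x1a\n")  # only the PNG signature, not a real image

def read_sales_total(lake):
    """Schema-on-read: parse the CSV columns only when processing."""
    total = 0.0
    for path in (Path(lake) / "raw" / "sales").glob("*.csv"):
        with path.open(newline="") as f:
            for row in csv.DictReader(f):
                total += float(row["amount"])
    return total

def read_click_users(lake):
    """Schema-on-read for JSON lines: pick out the 'user' field."""
    users = []
    for path in (Path(lake) / "raw" / "clicks").glob("*.jsonl"):
        for line in path.read_text().splitlines():
            users.append(json.loads(line)["user"])
    return users

print(read_sales_total(lake), read_click_users(lake))  # → 200.0 ['alice', 'bob']
```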

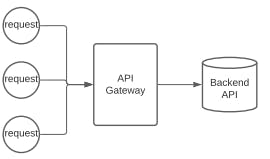

API Gateway: a programmable layer that sits in front of an API (for example, a backend application) and acts as the only entry point to it. Because every request passes through the gateway, traffic to the API can be monitored and controlled in one place.
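Here is a toy, in-process API gateway in plain Python, not a real gateway product. It shows the two roles of a gateway: control (rejecting requests without a valid API key before they reach a backend) and monitoring (counting requests per route), with a single `handle` entry point that routes requests to backends by path prefix. The routes, key, and the lambda standing in for a model-serving backend are hypothetical.

```python
from collections import Counter

class ToyGateway:
    """Toy API gateway: the single entry point in front of backend handlers."""

    def __init__(self, api_keys):
        self.api_keys = set(api_keys)
        self.routes = {}                 # path prefix -> backend handler
        self.request_counts = Counter()  # monitoring: requests per route

    def register(self, prefix, handler):
        self.routes[prefix] = handler

    def handle(self, path, api_key, payload=None):
        # Control: reject unauthenticated callers before any backend is hit.
        if api_key not in self.api_keys:
            return 401, {"error": "invalid API key"}
        for prefix, handler in self.routes.items():
            if path.startswith(prefix):
                self.request_counts[prefix] += 1
                return 200, handler(payload)
        return 404, {"error": "no route"}

gateway = ToyGateway(api_keys={"secret-key"})
# Hypothetical model backend: returns 2x + 1 for the input x.
gateway.register("/predict", lambda p: {"prediction": 2 * p["x"] + 1})
gateway.register("/health", lambda p: {"status": "ok"})

ok = gateway.handle("/predict", "secret-key", {"x": 10})     # (200, {'prediction': 21})
denied = gateway.handle("/predict", "wrong-key", {"x": 10})  # (401, {...})
missing = gateway.handle("/train", "secret-key")             # (404, {...})
```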

Detectron: Facebook AI Research's object detection library. Its current version, Detectron2, is built on the PyTorch framework (the original Detectron used Caffe2). It goes beyond plain object detection: it can also segment objects.
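The difference between detection and segmentation shows up in the output. According to Detectron2's documented output format, an instance segmentation model returns, for each detected object, a bounding box (`pred_boxes`) and a per-pixel mask (`pred_masks`). The plain-Python sketch below does not use Detectron2 and uses its own inclusive pixel-coordinate convention; it derives a box from a small hypothetical mask to show that the box covers more pixels than the object itself, which is the extra precision segmentation adds.

```python
def mask_to_box(mask):
    """Return the (x_min, y_min, x_max, y_max) box enclosing a binary mask."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return None  # empty mask: no object
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))

def box_area(box):
    """Pixel area of a box with inclusive corner coordinates."""
    x0, y0, x1, y1 = box
    return (x1 - x0 + 1) * (y1 - y0 + 1)

# A small L-shaped object (1 = object pixel, 0 = background).
mask = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 1, 0],
]
box = mask_to_box(mask)
mask_pixels = sum(map(sum, mask))
print(box, box_area(box), mask_pixels)  # → (1, 1, 2, 3) 6 4
```

The box (detection) spans 6 pixels while the mask (segmentation) marks only the 4 that belong to the object.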